Associate Professor Lilian Mina, Ph.D., is director of the writing program in the Department of English. She used generative AI tools in her upper-level Professional Writing course in fall 2023.

On the first day of fall semester this August, something unusual happened to Lilian Mina, Ph.D. Mina, an associate professor in the Department of English, is the head of the writing program at UAB and current president of the national Council of Writing Program Administrators. She was teaching a special topics course in professional writing and began by asking the class, a mix of graduate students and upper-level undergrads, whether they had any questions about the syllabus.

“It’s the most discussion I have ever had around a syllabus, I can tell you that,” she said. The key section: a short paragraph on generative AI. “Everyone wanted to talk about that,” Mina recalled. She summarized her position to the students in this way: “I’m not going to say we’re not going to use it.” That turned into a wide-ranging discussion “on what learning really means, on the value of grappling with ideas before you express them yourself, and what generative AI platforms mean for their future jobs,” Mina said.

Mina had been preparing for this conversation throughout the summer. In May, Department of English Chair Alison Chapman, Ph.D., had appointed Mina and three other faculty members to a task force charged with drafting the department’s response to generative AI. Mina had spent the summer reading, watching webinars and digging into the tools themselves: OpenAI’s ChatGPT, Google’s Bard and their cousins.

"Every time it was the same narrative.... and every time, we were proven wrong."

But Mina had a unique perspective of her own. One of her research topics is the use of digital technology in writing, and she is in the middle of a book project focused on how writing instructors engage in professional development. The spiral of hype, hysteria and educator over-reaction around AI looked pretty familiar. “We don’t want to treat this in the same way television, computers, the internet and social media were all treated,” Mina said. “Every time it was the same narrative: ‘It will kill writing; students won’t learn how to write.’ And every time, we were proven wrong.”

So Mina decided to face generative AI head-on in her professional writing course this fall. “If I let my students leave without knowing how to deal with AI, I haven’t done my job,” she said. “These students are either in or about to reach the job market. It is very important for these students to understand how generative AI is going to affect their jobs. We can’t just put our heads in the sand.”

As the semester wound down in November, Mina shared some of the AI-based activities she has incorporated in her course and the student reactions.

Activity 1: How will we use AI in this class? A group discussion.

During their first class meeting, Mina and her students agreed that they would revisit the subject of AI use before each major assignment, with students allowed to use AI in a way tailored to the specific learning outcomes and scope of each project. (These projects included creating a podcast and a video for a community partner, the Alabama Disability Advocacy Program.) Any use of AI would have to be carefully documented, Mina told the class — and not primarily because of plagiarism or academic integrity concerns. “You will be professionals,” Mina said. “If you are using these tools in the workplace, you will be expected to document how you use them. This is part of your training in this class.”

Activity 2: Limited assistance on a podcast project.

For their podcast, the students and Mina decided on two approved uses for AI: generating transcripts of their interviews and offering ideas on ways to organize the podcast — after they had developed the idea, outline and basic script. (Using AI was not required, though; students could, and did, opt to do without it.) Mina discussed fabrication, bias and other pitfalls of current AI models, and students had to fact-check all AI output.

Activity 3: Hands-on experience with AI image and video generation.

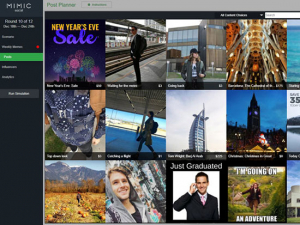

Before their video project, the students worked in class in groups to generate images and short video clips using one of several AI platforms that Mina had selected. The goal, Mina said, “was to see whether these images or videos could be helpful” in combination with the main content of the project: video interviews with adults who had cognitive disabilities. Each group had to generate three to five images and clips, then show their work to the class.

“Many of these students will join the workforce as content creators,” Mina said. The activity was very enlightening for two reasons, she notes. She had some very advanced students who already knew exactly how to craft prompts to generate the images they wanted. And all the students were up to date on the latest trends on social media, choosing to generate images and video that would match those trends.

The students were able to identify bias, limitations and stereotypes in the generated images, Mina notes. But many of them, even the savvy ones, said, “I don’t see myself using these platforms in a professional setting because of all the problems I have seen in the outcomes,” Mina said. In addition to the problematic bias and stereotyping, the results would just be “easily dismissed as low-quality work,” the students said.

And despite the lightning-fast responses of AI tools, actually getting them to do what you want takes a great deal of time and patience. “They said, ‘This just takes too long,’” Mina recalled.

Two groups did see potential in using the low-quality generated video to help illustrate sections of interviews about incidents (such as being hospitalized) that would be difficult to film or depict in other ways. And generated images could be helpful in storyboarding video projects for client review, the students suggested.

Activity 4: Using AI in research

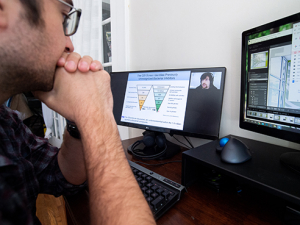

In a presentation at the Center for Teaching and Learning in October, Mina offered several examples of how generative AI can be helpful during the research phase of projects — using her own research for her current book as a real-life example. “Research is always a source of trouble for students in the EH 102 [second semester of First-Year Composition] course, which is focused on research writing,” Mina said. But research also is a continuing struggle for graduate students and even for experienced academics, she adds. Generative AI can be helpful in refining search topics and in properly formatting search queries for library databases, Mina explained. (Give ChatGPT or Bard a list of search terms, and it can add all the fussy quotation marks and “AND”s needed for a Boolean query in seconds, for instance.)
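For readers curious what that query formatting involves, here is a minimal Python sketch of the same chore done by hand. It is an illustration only, not something Mina presented: the function name `build_boolean_query` and the sample search terms are hypothetical, and it assumes a database that accepts double-quoted phrases joined by an uppercase operator, which varies from one library database to another.

```python
def build_boolean_query(terms, operator="AND"):
    """Quote multi-word phrases and join all terms with a Boolean operator.

    Assumption: the target database treats "double-quoted text" as an exact
    phrase and accepts uppercase operators such as AND or OR.
    """
    cleaned = []
    for term in terms:
        term = term.strip()
        if not term:
            continue  # skip blank entries in the list
        if " " in term:
            # Multi-word phrases need quotation marks to be searched as a unit.
            term = f'"{term}"'
        cleaned.append(term)
    return f" {operator} ".join(cleaned)


# Hypothetical search terms, chosen only for illustration.
print(build_boolean_query(["generative AI", "writing instruction", "pedagogy"]))
```

The point of the sketch is only to show how mechanical the task is, which is why it is a reasonable one to hand to a chatbot. Any query produced this way, by code or by AI, should still be checked against the syntax rules of the database being searched.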

Looking back at the semester in progress, “it was very interesting to see that many of my students were resistant to using AI,” Mina said. “That is contrary to the current narrative that ‘students are just going to use it instead of writing.’ These students, in this class at least, told me, ‘I want to do the work myself. I want to use my own brain, not outsource it to a machine. I want to continue to grapple with writing myself.’”