NSSE was administered electronically in spring 2022 to first-year students and seniors. Of 1,293 total respondents, 645 were first-year students and 648 were seniors.

UAB student responses on Discussions with Diverse Others were comparable to the top 10% and significantly higher than the top 50% of 2021 and 2022 institutions participating in NSSE.

- Among first-year students, 64% frequently had discussions with people with different political views, 76% frequently had discussions with people from a different economic background, and 81% frequently had discussions with people from a different race or ethnicity.

In 2022, 86% of UAB seniors had participated in one or more High-Impact Practices (HIPs).

- UAB seniors reported significantly higher participation than peers in all HIPs except Study Abroad.

Perceived gains among seniors (how much their experience at UAB contributed to their knowledge, skills, and personal development) grew in multiple areas from 2020 to 2022.

- Working effectively with others increased by 7%.

- Acquiring job- or work-related knowledge and skills increased by 6%.

- Being an informed and active citizen improved by 5%.

- Analyzing numerical and statistical information as well as speaking clearly and effectively each increased by 2%.

Regarding academic challenge, both first-year students and seniors gave high ratings to the extent to which their courses challenged them to do their best work. Additionally, both first-year students and seniors reported greater institutional emphasis on academics than their counterparts at SACSCOC peer institutions.

UAB students reported a lower frequency of student‐faculty interactions than faculty reported in FSSE 2022. Even so, UAB students gave high ratings for the quality of interactions with faculty.

UAB Program Goals & Student Learning Outcomes Guide

As an educational institution, The University of Alabama at Birmingham’s overall goal and purpose is to successfully produce student learning. Consequently, a vital part of maintaining and measuring that success is the continued evolution and evaluation of Program Goals and Student Learning Outcomes. Additionally, UAB’s regional accreditor, the Southern Association of Colleges and Schools Commission on Colleges (SACSCOC), requires evidence of continuous improvement of Student Learning Outcomes and Program Goals. Student Learning Outcomes define what the student will know and/or be able to do after completing the program. Program Goals are broad statements that should define the long-term outcomes of the program or course of study.

Together, Student Learning Outcomes and Program Goals work to describe the program’s mission and its implementation.


Developing Program Goals

Program Goals may be tightly related to the unit’s strategic plan. These goals are captured in UAB Planning, not in UAB Outcomes as a part of the Annual Academic Assessment Plan. Program Goals broadly define the overall goals of an education program (e.g., to graduate students who are prepared for continuing education, such as graduate studies or post-docs, and/or the workplace) and program elements such as:

- Total number of enrolled students

- Percentage of applicants admitted

- Percentage of minority students enrolled

- Average degree completion time

- Completion rate

- Number of graduates

Program Goals should be informed by workplace and graduate studies expectations for program graduates and the desire for continuous program improvement and growth.

Program Goals: Questions to Consider

- What should students know or be able to do by the end of this program?

- What do we want this program to produce/accomplish? How long do we think it will take for the program to be able to accomplish that?

Example Program Goals

Educational Program Goals:

- To graduate students who are prepared to be innovative industry leaders

- To graduate students who are prepared to lead independent research

Strategic Program Goals:

- To increase 4-year completion of the program by 5% in the next two years

- To increase the number of female engineering students by 10% in the next three years


Developing S.M.A.R.T. Goals for Academic Programs

S.M.A.R.T. goals are specific, measurable, achievable, relevant, and time-bound.

- Specific: What do we want to accomplish?

- Measurable: How can we measure progress to know that we’ve met our goal?

- Achievable: Do we have the skills/resources/tools needed to achieve the goal?

- Relevant: Why are we setting this goal? Is it aligned with overall objectives?

- Time-bound: When will we meet the goal? How long will it take?


Developing Student Learning Outcomes

Student Learning Outcomes assessment is captured in UAB Outcomes as a part of the UAB Annual Academic Plan. Student Learning Outcomes are the practical student learning criteria that will lead to attaining Program Goals. A Student Learning Outcome statement should consist of an action verb and a statement that defines the knowledge/skill/ability that will be measured/observed/demonstrated.

For example, if the Program Goal is “to graduate students who are prepared to practice medicine as a general practitioner,” then one possible Student Learning Outcome could be “the student can accurately diagnose patient case studies.”

Ultimately, Student Learning Outcomes should answer the question: “What knowledge, skills, and/or abilities will students need to meet our broader Program Goals?”

UAB Outcomes Process & Submissions

- Academic annual assessment plan submissions to the UAB Outcomes Team should be completed using the UAB Outcomes Template. A similar template may be used instead, but it must contain all of the sections and request the same information as the provided template.

- After the template has been completely filled out, it should be reviewed using the UAB Outcomes Rubric prior to submission to the UAB Outcomes Team.

- Once the plan has been reviewed using the rubric and any needed revisions have been made, it should be sent to the UAB Outcomes Team.

- The UAB Outcomes Team will review submissions and provide feedback to be addressed prior to any future submissions.

FAQs


Why does assessment matter on an institutional level?

- Assessment informs the institution of programs’ goals and effectiveness.

- UAB’s regional accreditor, the Southern Association of Colleges and Schools Commission on Colleges (SACSCOC), requires evidence of continuous improvement of Student Learning Outcomes and Program Goals under Standard 8.2a of its “Principles of Accreditation.”

Standard 8.2 reads: “The institution identifies expected outcomes, assesses the extent to which it achieves these outcomes, and provides evidence of seeking improvement based on analysis of the results in…Student learning outcomes for each of its educational programs.”


Who should I contact about assessment questions?


Which programs are required to report?

All academic degree programs and certificate programs are required to report.


What is the minimum number of students for reporting?

The minimum number of students for reporting is 10 for undergraduate students and 5 for graduate/professional students. These minimums help protect the anonymity of our students.
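As an illustration only, the reporting minimums above can be expressed as a simple check. This is a minimal sketch, assuming the minimum applies to the number of students in the group being reported; the function name `meets_reporting_minimum` and the level labels are hypothetical, not part of any UAB system.

```python
# Hypothetical helper. The thresholds come from the FAQ above
# (10 undergraduate, 5 graduate/professional); the level labels are illustrative.
MIN_REPORTING_N = {
    "undergraduate": 10,
    "graduate/professional": 5,
}


def meets_reporting_minimum(level: str, n_students: int) -> bool:
    """Return True if a group is large enough to report under the stated minimums."""
    try:
        minimum = MIN_REPORTING_N[level]
    except KeyError:
        raise ValueError(f"unknown level: {level!r}") from None
    # "Minimum" is read as inclusive: exactly 10 (or 5) students may be reported.
    return n_students >= minimum


print(meets_reporting_minimum("undergraduate", 8))          # False
print(meets_reporting_minimum("graduate/professional", 5))  # True
```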

American Association for Higher Education: Principles 2, 5, & 6

Principle 2: Assessment is most effective when it reflects an understanding of learning as multidimensional, integrated, and revealed in performance over time. Learning is a complex process. It entails not only what students know but what they can do with what they know; it involves not only knowledge and abilities but values, attitudes, and habits of mind that affect both academic success and performance beyond the classroom. Assessment should reflect these understandings by employing a diverse array of methods, including those that call for actual performance, using them over time so as to reveal change, growth, and increasing degrees of integration. Such an approach aims for a more complete and accurate picture of learning, and therefore firmer bases for improving our students’ educational experience.

Principle 5: Assessment works best when it is ongoing not episodic. Assessment is a process whose power is cumulative. Though isolated, “one-shot” assessment can be better than none, improvement is best fostered when assessment entails a linked series of activities undertaken over time. This may mean tracking the process of individual students, or of cohorts of students; it may mean collecting the same examples of student performance or using the same instrument semester after semester. The point is to monitor progress toward intended goals in a spirit of continuous improvement. Along the way, the assessment process itself should be evaluated and refined in light of emerging insights.

Principle 6: Assessment fosters wider improvement when representatives from across the educational community are involved. Student learning is a campus-wide responsibility, and assessment is a way of enacting that responsibility. Thus, while assessment efforts may start small, the aim over time is to involve people from across the educational community. Faculty play an especially important role, but assessment’s questions can’t be fully addressed without participation by student-affairs educators, librarians, administrators, and students. Assessment may also involve individuals from beyond the campus (alumni/ae, trustees, employers) whose experience can enrich the sense of appropriate aims and standards for learning. Thus understood, assessment is not a task for small groups of experts but a collaborative activity; its aim is wider, better-informed attention to student learning by all parties with a stake in its improvement.

Assessment Types

There are two distinct types of assessment measurements: direct and indirect.

- Direct assessments are typically integrated into the classroom and directly measure evidence of students’ learning of course content. Some examples of direct assessments are quizzes, tests, papers, projects, portfolios, and licensure exams.

Additionally, direct assessments can be broken into two subcategories: formative and summative.

- Formative assessments are low-stakes assessments that can be used to track student learning, progress, and understanding throughout a lesson or unit, and they can contribute crucial information about the effectiveness of current teaching methods. Some examples of formative assessments are quizzes, draft submissions, and discussions.

- Summative assessments are higher-stakes assessments used to verify student learning at the end of a unit or course. Some examples of summative assessments include tests, papers, projects, portfolios, and licensure exams.

While formative assessments focus on smaller portions or segments of student learning throughout a unit or course, summative assessments focus on evaluating whether Student Learning Outcomes have been reached.

- Indirect assessments are those that measure student views, perceptions, and reflections of their learning. Some examples of indirect assessments are self-evaluations, surveys, and post-graduation reports. Indirect assessments can provide vital feedback on student learning processes and the reception of teaching practices. When performing program, course, or instructor evaluations, indirect assessments can be a key element in assessing how effective current processes and/or methods truly are.

Institutional Assessment Instruments & Resources


Faculty Survey of Student Engagement

The Faculty Survey of Student Engagement (FSSE) is designed to complement NSSE by measuring faculty members’ expectations of student engagement in educational practices that are empirically linked with high levels of learning and development. FSSE also collects information about how faculty members spend their time on professorial activities, such as teaching and scholarship, and about the kinds of learning experiences their institutions emphasize. UAB participates in FSSE concurrently with NSSE on a two-year cycle. More information about FSSE is available at fsse.iub.edu.

UAB faculty consistently place great value on learning and High-Impact Practices, especially internships and capstones. Service learning and research with faculty are also highly favored. Two areas where UAB faculty and student responses agree are Higher-Order Learning and Additional Academic Challenge. There are also some contrasts between NSSE and FSSE results. For example, students report a lower frequency of student‐faculty interactions than faculty do. These differences suggest that a large amount of high-quality interaction and learning occurs outside of the classroom.

At 44.6%, the 2020 FSSE response rate was the highest UAB has seen in recent years. By comparison, the response rate was 40% in 2016, 24% in 2018, and 25% in 2022.


First Destination Survey

The UAB First Destination Survey (FDS) is a very brief electronic survey administered each term to graduating students. The results tell us what UAB students are doing or plan to do when they graduate. The information collected relates to graduate school, career plans, salary, and qualitative information pertaining to preparation for life after graduation.


National Survey of Student Engagement

The National Survey of Student Engagement (NSSE) collects information from first-year and senior students about the nature and quality of their undergraduate experience. It measures the extent to which students engage in effective educational practices that are empirically linked with learning, personal development, and other desired outcomes such as student satisfaction, persistence, and graduation. UAB participates in NSSE every two years. More information about NSSE is available at nsse.iub.edu.


Noel-Levitz Student Satisfaction Inventory

The Noel-Levitz Student Satisfaction Inventory (SSI) provides information for use in improving student learning and life by identifying how satisfied students are as well as what issues are important to them. It covers a wide range of institutional activities and services, from academics to student services to extracurricular activities. The SSI will be administered on a three-year cycle. More information about SSI is available at ruffalonl.com.


Student Learning Outcomes

UAB academic programs measure student learning through expected outcomes both inside and outside of the classroom throughout the academic program. These assessment practices help UAB to focus on the design and improvement of educational experiences to enhance student learning and support appropriate outcomes for each educational program.

- IDEA Course Evaluations


UAB Center for Teaching and Learning

The UAB Center for Teaching and Learning offers a variety of events, workshops, teaching resources, and classroom technology information for faculty looking to better their teaching and assessment practices.

External Assessment Resources

- Assessment Commons

- Association of American Colleges and Universities (AAC&U): VALUE Rubrics

- National Institute for Learning Outcomes Assessment (NILOA): Assessment Resources Archive

- National Institute for Learning Outcomes Assessment (NILOA): Equity in Assessment

- National Institute for Learning Outcomes Assessment (NILOA): Transparency Framework

American Association for Higher Education: Principles 3 & 4

Principle 3: Assessment works best when the programs it seeks to improve have clear, explicitly stated purposes. Assessment is a goal-oriented process. It entails comparing educational performance with educational purposes and expectations—those derived from the institution’s mission, from faculty intentions in program and course design, and from knowledge of students’ own goals. Where program purposes lack specificity or agreement, assessment as a process pushes a campus toward clarity about where to aim and what standards to apply; assessment also prompts attention to where and how Program Goals will be taught and learned. Clear, shared, implementable goals are the cornerstone for assessment that is focused and useful.

Principle 4: Assessment requires attention to outcomes but also and equally to the experiences that lead to those outcomes. Information about outcomes is of high importance; where students “end up” matters greatly. But to improve outcomes, we need to know about student experience along the way—about the curricula, teaching, and kind of student effort that led to particular outcomes. Assessment can help us understand which students learn best under what conditions; with such knowledge comes the capacity to improve the whole of their learning.

Establishing Program Goals & Student Learning Outcomes

Program Goals

Program Goals are broad statements that should define the long-term outcomes of the program or course of study. Program Goals should give a concise overview of what students should have learned, attained, or be able to do by the end of the program or course of study.

Example Program Goals:

- BS Elementary Education - The goal of the Elementary Education Bachelor of Science is to graduate students who are prepared to teach in K-6 classrooms.

- Master of Business Administration - The goal of the Master of Business Administration program is to graduate students who are prepared to be innovative industry leaders.

Student Learning Outcomes

Student Learning Outcomes should be informed by Program Goals.

Student Learning Outcomes are statements that define the knowledge, skills, and/or abilities that students have and can demonstrate when they have completed or participated in a course of study or program. Student Learning Outcomes should be specific and meaningful, as well as measurable, observable, and/or demonstrable. Another way to think about Student Learning Outcomes is to align them with SMART objectives: specific, measurable, achievable, relevant, and time-bound.

A Student Learning Outcome statement should consist of an action verb and a statement that defines the knowledge/skill/ability that will be measured/observed/demonstrated.

Example Student Learning Outcomes:

- The student will evaluate news sources for media bias and logical fallacies.

- The student will identify all the bones and joints of the human body.

- The student will create a business proposal that appropriately demonstrates and applies business strategies and concepts.

Bloom’s Taxonomy

Bloom’s Taxonomy is a helpful resource when crafting Student Learning Outcomes.

Bloom’s Taxonomy is an educational framework that has been used and revised by educators for decades. For more information about Bloom’s Taxonomy, see the Vanderbilt University Center for Teaching page cited below.

Armstrong, P. (2010). Bloom’s Taxonomy. Vanderbilt University Center for Teaching. Retrieved Sept. 2022 from Bloom's Taxonomy.


This page also describes a separate taxonomy of cognitive knowledge:

- Factual Knowledge: terminology; specific details and elements

- Conceptual Knowledge: classifications and categories; principles and generalizations; theories, models, and structures

- Procedural Knowledge: subject-specific skills and algorithms; subject-specific techniques and methods; criteria for determining when to use appropriate procedures

- Metacognitive Knowledge: strategic knowledge; cognitive tasks, including appropriate contextual and conditional knowledge; self-knowledge

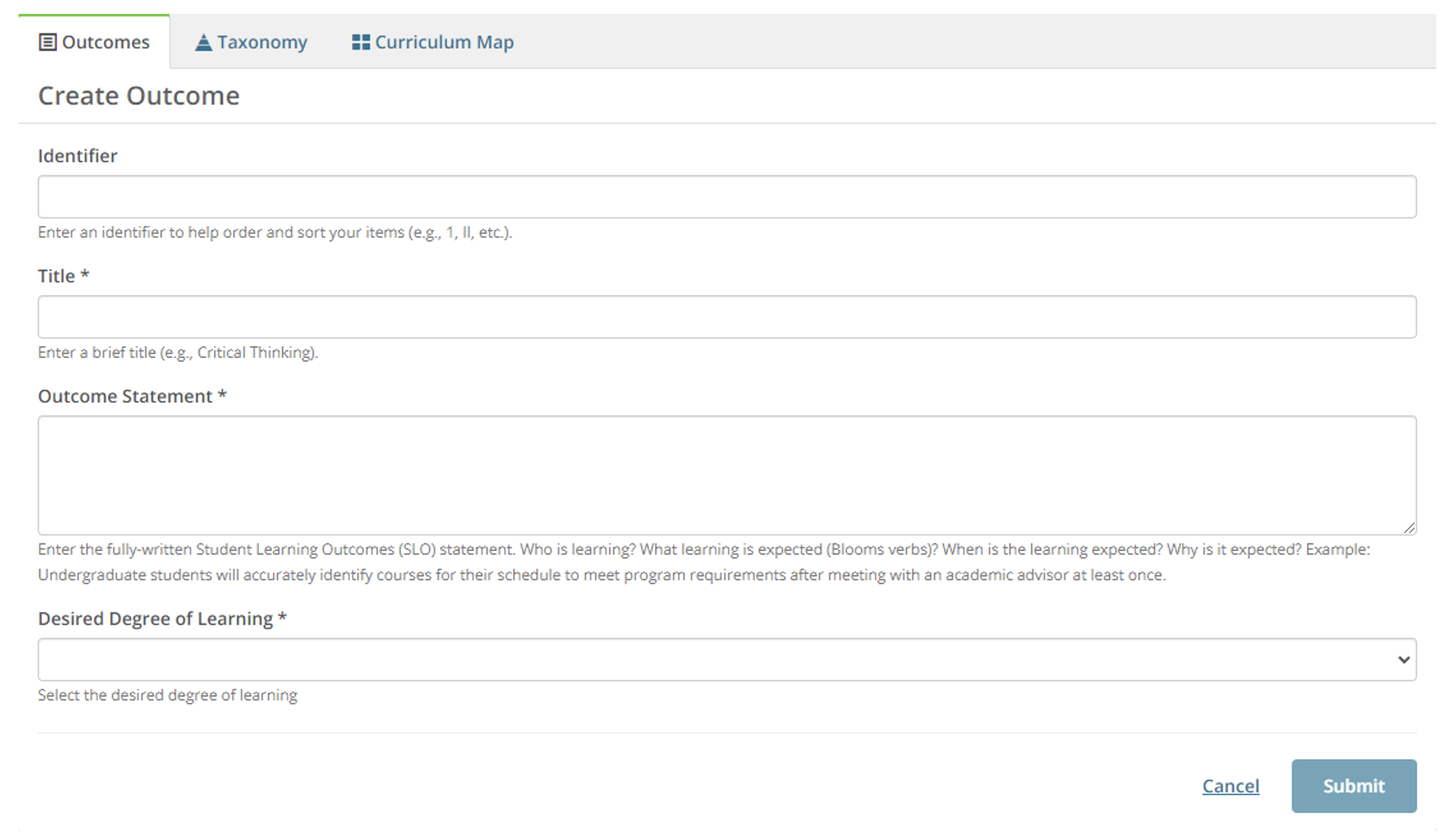

UAB Outcomes

To add Student Learning Outcomes to UAB Outcomes, use the following link: uab.campuslabs.com/outcomes.

Once there, you will be asked to complete the template below.

For additional questions or assistance with the UAB Outcomes platform, please reach out to the

American Association for Higher Education: Principles 7 & 8

Principle 7: Assessment makes a difference when it begins with issues of use and illuminates questions that people really care about. Assessment recognizes the value of information in the process of improvement. But to be useful, information must be connected to issues or questions that people really care about. This implies assessment approaches that produce evidence that relevant parties will find credible, suggestive, and applicable to decisions that need to be made. It means thinking in advance about how the information will be used, and by whom. The point of assessment is not to gather data and return “results”; it is a process that starts with the questions of decision-makers, that involves them in the gathering and interpreting of data, and that informs and helps guide continuous improvement.

Principle 8: Assessment is most likely to lead to improvement when it is part of a larger set of conditions that promote change. Assessment alone changes little. Its greatest contribution comes on campuses where the quality of teaching and learning is visibly valued and worked at. On such campuses, the push to improve educational performance is a visible and primary goal of leadership; improving the quality of undergraduate education is central to the institution’s planning, budgeting, and personnel decisions. On such campuses, information about learning outcomes is seen as an integral part of decision making, and avidly sought.

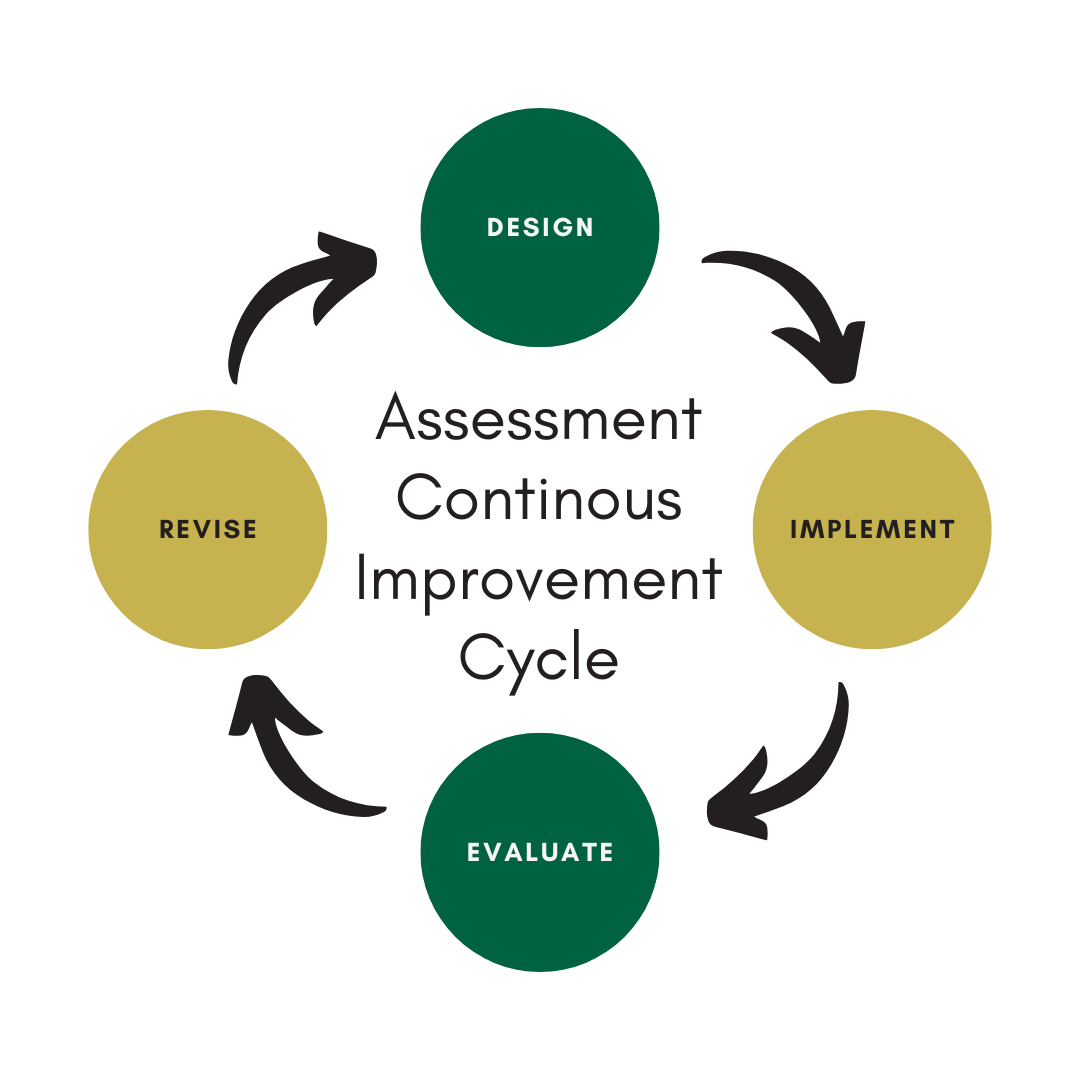

Continuous Improvement Cycle

Assessment should be an ongoing, ever-evolving process of improvement. It should be a continual cycle of designing, implementing, evaluating, and revising Student Learning Outcomes and teaching strategies. Each step of the process should be informed by both direct and indirect assessment measures, as well as by changes in overall Student Learning Outcomes. As our disciplines and students evolve over time, so should our assessments.


Design

1. Student Learning Outcomes should be established to define the long-term outcomes of the program or course of study.
   - What should the student know by the end of the program or course of study?

2. Student Learning Outcomes statements should consist of an action verb and a statement that defines the knowledge/skill/ability that will be measured/observed/demonstrated.

3. Course content should be designed to support and fulfill Student Learning Outcomes.
   - How will I ensure the student learns the knowledge/skill/ability? What assessment measures will I use to assess the student’s progress?

Implement

4. Program faculty and staff should implement action items as designed.

5. Program faculty and staff should measure student achievement and collect data for further review.

Evaluate

6. Program faculty and staff should evaluate and analyze the collected data to determine strengths and weaknesses of student learning and consider how their teaching practices contributed to Student Learning Outcomes.

Revise

7. Program faculty and staff will make educated decisions on how to strengthen future student success.

8. Program faculty and staff will integrate any content, assessment, or teaching method changes and assess the effects.

Repeat steps 1-8.


Analyzing Student Learning Outcomes Data

Focus foremost on collecting data that will inform you about student learning that matters. Standardized tools such as rubrics and exams are important in collecting “numbers,” but you can supplement the numbers with a holistic look at student learning based on the faculty members' experience and professional judgement about the student work they have reviewed.

A criterion level for each SLO should be identified in the program’s Assessment Plan. The criterion level, selected by faculty, is the minimum level that students must achieve in aggregate (all students in the major or minor, not individual students) to demonstrate that the SLO has been achieved.

Examples of Targets:

- specific percentile score on a major field exam

- average performance scores

- narrative description of student performance
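To make the aggregated criterion level concrete, the sketch below checks a target of the form “a given percentage of students score at or above a given rubric level.” It is a minimal illustration only: the 1-4 rubric scale, the scores, the 80% target, and the function name `criterion_met` are all hypothetical, and a program’s actual criterion comes from its own Assessment Plan.

```python
from typing import Sequence, Tuple


def criterion_met(scores: Sequence[int], passing_score: int, target_pct: float) -> Tuple[float, bool]:
    """Hypothetical aggregate check for one SLO.

    Returns the percentage of students scoring at or above `passing_score`
    and whether that percentage reaches `target_pct`. The check is made on
    the whole group, not on individual students.
    """
    if not scores:
        raise ValueError("no scores to evaluate")
    at_or_above = sum(1 for s in scores if s >= passing_score)
    share = 100 * at_or_above / len(scores)
    return share, share >= target_pct


# Hypothetical rubric scores (1-4 scale) for all students assessed on one SLO.
scores = [4, 3, 3, 2, 4, 3, 1, 3, 4, 3]
share, met = criterion_met(scores, passing_score=3, target_pct=80)
print(f"{share:.0f}% of students scored 3 or higher; criterion met: {met}")
# prints: 80% of students scored 3 or higher; criterion met: True
```

The same pattern fits an “average performance score” target by comparing the mean score, rather than the share at or above a level, against the target.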

Quantitative Data – Assessment data measured numerically (counts, scores, percentages, etc.) are most often summarized using simple charts, graphs, tables, and descriptive statistics such as the mean, median, mode, standard deviation, and percentage. The best quantitative analysis method depends on (a) the specific assessment method, (b) the type of data collected (nominal, ordinal, interval, or ratio), and (c) the audience receiving and using the results. No single analysis method is best, but means (averages) and percentages are used most frequently. The UAB Assessment Team is available to consult on quantitative data analysis.
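For a small set of rubric scores, the descriptive statistics listed above can be computed with Python’s standard `statistics` module. This is a sketch with hypothetical scores. Using the sample (rather than population) standard deviation is an assumption; `statistics.pstdev` is the population version.

```python
import statistics

# Hypothetical rubric scores (1-4 scale) for one SLO, aggregated across students.
scores = [4, 3, 3, 2, 4, 3, 1, 3, 4, 3]

summary = {
    "n": len(scores),
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "mode": statistics.mode(scores),    # most common score
    "stdev": statistics.stdev(scores),  # sample standard deviation (n - 1 denominator)
}

for name, value in summary.items():
    print(f"{name}: {round(value, 2)}")
```

Because rubric scores are ordinal, the median and mode are often easier to defend than the mean. Reporting all of them lets the audience see the shape of the results.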

Qualitative Data – SLOs assessed using qualitative methods focus on words and descriptions and produce verbal or narrative data. These data are collected through focus groups, interviews, open-ended questionnaires, and other less structured methods. Generally speaking, descriptions or words are the preferred way to present these data. Abundant assistance on using qualitative assessment is available online. The UAB Assessment Team is available to consult on qualitative data analysis. (NOTE: Many qualitative methods are also “quantifiable,” meaning their results can be summarized using numbers. For example, art faculty use a numeric rubric to apply their professional judgment in assessing student portfolios.)


Disseminate, Discuss, Act

After the findings have been gathered and the Assessment Report created, the faculty should review the data and discuss both positive and negative results. We recommend doing this at a faculty meeting or annual retreat, when there is more time for discussion. Other stakeholders may be included in these meetings, and feedback should be captured to assist with action planning. Consistently archiving this documentation will help in writing the Annual Academic Plan.

Example of Reporting Format

| Element in the Report | Guiding Questions (some may not apply) |
| --- | --- |
| Student Learning Outcome | |
| Type of Evidence Collected and date collected | |
| Sampling Criteria (if Sampling) | Who submitted data? How many students are in the program (or how many graduate each year)? How many were asked to participate in the study? How were they selected? How many participated? How many non-responses were there? |
| How the evidence was evaluated | What scoring rubric was used? (Include the rubric in the report.) How many non-responses were there? What were the benchmark samples of student work? (Include those anchors in the report.) |
| Timeline of key events | Where in the curriculum does the assessment fall? What level of learning should the student have at this point in the curriculum? (Licensure exam = mastery or clinical evaluation = developing) |
| Summary of results | How many students passed the exam? How many students scored "1," "2," "3," or "4"? How many faculty members agreed, disagreed, were neutral? |
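A summary question such as “How many students scored 1, 2, 3, or 4?” amounts to a frequency count. The sketch below uses hypothetical scores and Python’s `collections.Counter`. It lists every rubric level, so levels that no student received still appear in the report.

```python
from collections import Counter

# Hypothetical rubric scores on a 1-4 scale, one per participating student.
scores = [4, 3, 3, 2, 4, 3, 1, 3, 4, 3]

counts = Counter(scores)
total = len(scores)

# Report every rubric level, including any level no student received
# (indexing a Counter with a missing key returns 0).
for level in (1, 2, 3, 4):
    n = counts[level]
    print(f'Scored "{level}": {n} of {total} ({100 * n / total:.0f}%)')
```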


Take Action

Once SLO data has been analyzed, disseminated, and discussed, it is time to use the data to improve quality. That improvement may fall into multiple categories, but an action plan is always necessary.

Categories for improvement may include:

- Instruction & Teaching
  - Adjust instruction
  - Create a collaboration
  - Revise Course Learning Outcomes
  - Create new assessment tools
  - Integrate other dimensions of significant learning

- Curricular
  - Modify frequency of course offerings
  - Add or remove courses
  - Pedagogical models to be shared with faculty
  - Revision of course content or assignments

- Budget & Resource
  - Add laboratory resources
  - Add technology resources
  - Hire or re-assign faculty or staff
  - Alter classroom space

- Academic Process
  - Revise course prerequisites
  - Revise criteria for admission
  - Revise advising process or protocols

Elements of an action plan may include:

| Element | Questions to consider |
| --- | --- |
| Area for Improvement | What areas do the data suggest for improvement? If the target was met at 100%, should the target be adjusted? Should the outcome be adjusted? Could we consider a higher level of cognitive engagement? |
| Actions and timelines | What types of changes, adjustments, revisions, or additions could we make to the program to see growth in the area for improvement identified? How much time will the change require? Should we make changes gradually in phases? Are there other critical timelines to consider (accreditation, licensure, other evaluations)? |
| Resources needed | Time? Budgets? Staff? Location? Equipment? |
| Monitoring success | How and when is it appropriate to evaluate the impact of these changes? Formative evaluation? Who will help evaluate the change? |