American Association for Higher Education: Principles 7 & 8

Principle 7: Assessment makes a difference when it begins with issues of use and illuminates questions that people really care about. Assessment recognizes the value of information in the process of improvement. But to be useful, information must be connected to issues or questions that people really care about. This implies assessment approaches that produce evidence that relevant parties will find credible, suggestive, and applicable to decisions that need to be made. It means thinking in advance about how the information will be used, and by whom. The point of assessment is not to gather data and return “results”; it is a process that starts with the questions of decision-makers, that involves them in the gathering and interpreting of data, and that informs and helps guide continuous improvement.

Principle 8: Assessment is most likely to lead to improvement when it is part of a larger set of conditions that promote change. Assessment alone changes little. Its greatest contribution comes on campuses where the quality of teaching and learning is visibly valued and worked at. On such campuses, the push to improve educational performance is a visible and primary goal of leadership; improving the quality of undergraduate education is central to the institution’s planning, budgeting, and personnel decisions. On such campuses, information about learning outcomes is seen as an integral part of decision making, and avidly sought.

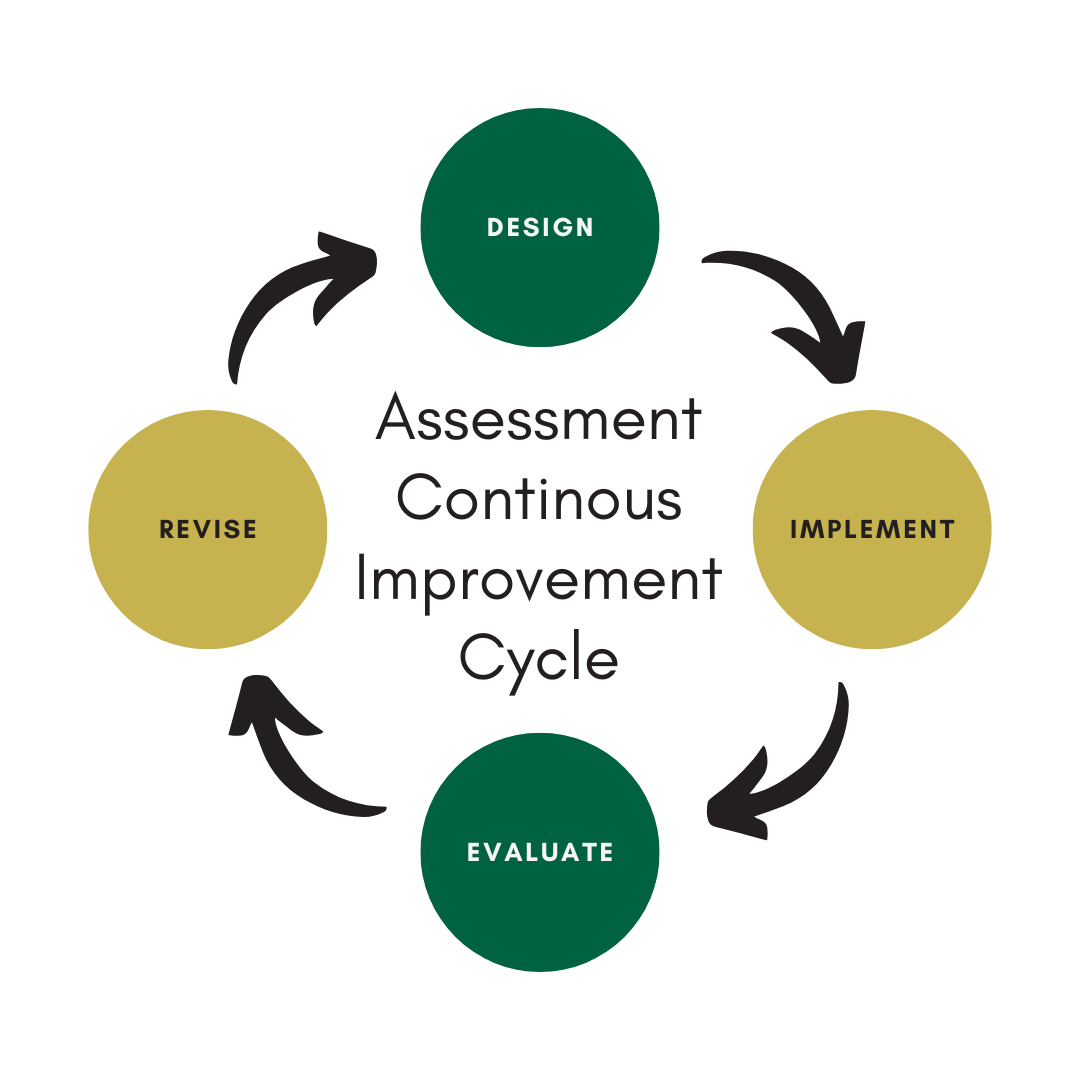

Continuous Improvement Cycle

Assessment should be an ongoing, ever-evolving process of improvement. It should be a continual cycle of designing, implementing, evaluating, and revising Student Learning Outcomes and teaching strategies. Each step of the process should be informed by both direct and indirect assessment measures, as well as by changes in overall achievement of Student Learning Outcomes. As our disciplines and students evolve over time, so should our assessments.

1. Student Learning Outcomes should be established to define the long-term outcomes of the program or course of study.
   - What should the student know by the end of the program or course of study?
2. Student Learning Outcome statements should consist of an action verb and a statement that defines the knowledge, skill, or ability that will be measured, observed, or demonstrated.
3. Course content should be designed to support and fulfill Student Learning Outcomes.
   - How will I ensure the student learns the knowledge, skill, or ability? What assessment measures will I use to assess the student’s progress?
4. Program faculty and staff should implement action items as designed.
5. Program faculty and staff should measure student achievement and collect data for further review.
6. Program faculty and staff should evaluate and analyze the collected data to determine strengths and weaknesses in student learning and consider how their teaching practices contributed to achievement of Student Learning Outcomes.
7. Program faculty and staff should make informed decisions on how to strengthen future student success.
8. Program faculty and staff should integrate any content, assessment, or teaching method changes and assess their effects.
9. Repeat steps 1-8.

Cycle phases: Design → Implement → Evaluate → Revise

Analyzing Student Learning Outcomes Data

Focus foremost on collecting data that will inform you about student learning that matters. Standardized tools such as rubrics and exams are important for collecting “numbers,” but you can supplement those numbers with a holistic look at student learning based on faculty members' experience and professional judgment about the student work they have reviewed.

A criterion level for each SLO should be identified in the program’s Assessment Plan. The criterion level, selected by faculty, is the minimum level that students as a group (all students in the major or minor, using aggregated data, rather than individual students) must reach to demonstrate achievement of the SLO.

Examples of targets (criterion levels):

- specific percentile score on a major field exam

- average performance scores

- narrative description of student performance
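To make the idea of an aggregated criterion level concrete, the Python sketch below checks a hypothetical target of "at least 80% of all students score 3 or higher on a 4-point rubric." The threshold values, the sample scores, and the function name `meets_criterion` are illustrative assumptions, not values from any program's Assessment Plan. The check is made on the group as a whole, not on individual students.

```python
def meets_criterion(scores, min_score=3, target_pct=80.0):
    """Check a hypothetical aggregated criterion level.

    Returns (percent of students scoring at least min_score, whether that
    percent reaches target_pct). Results are judged for the whole group,
    not for individual students.
    """
    if not scores:
        raise ValueError("no scores to evaluate")
    at_or_above = sum(1 for s in scores if s >= min_score)
    percent = 100.0 * at_or_above / len(scores)
    return percent, percent >= target_pct


# Illustrative rubric scores (1-4) for all students in a major.
scores = [4, 3, 2, 3, 4, 3, 1, 4, 3, 3]
percent, met = meets_criterion(scores)
print(f"{percent:.0f}% scored 3 or higher; criterion met: {met}")
# prints: 80% scored 3 or higher; criterion met: True
```

Keeping the threshold and target as parameters lets faculty revisit them later, for example if the target is met at 100% and should be raised.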

Quantitative Data – Assessment data measured numerically (counts, scores, percentages, etc.) are most often summarized using simple charts, graphs, tables, and descriptive statistics (mean, median, mode, standard deviation, percentage, etc.). Which quantitative analysis method is best depends on (a) the specific assessment method, (b) the type of data collected (nominal, ordinal, interval, or ratio), and (c) the audience receiving and using the results. No single analysis method is best, but means (averages) and percentages are used most frequently. The UAB Assessment Team is available to consult on quantitative data analysis.
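The descriptive statistics named above can be computed with Python's standard `statistics` module. This is a minimal sketch over made-up exam scores; the passing score of 75 is a hypothetical cut-off, not a recommended value. For ordinal data such as rubric levels, the median and mode are often more meaningful than the mean, which is one reason the type of data matters when choosing a method.

```python
import statistics

# Hypothetical exam scores (percent correct) for one cohort.
scores = [72, 85, 90, 66, 85, 78, 94, 85, 70, 88]
PASSING_SCORE = 75  # hypothetical cut-off for illustration only

summary = {
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "mode": statistics.mode(scores),
    "standard deviation": statistics.stdev(scores),  # sample standard deviation
    "percent passing": 100 * sum(s >= PASSING_SCORE for s in scores) / len(scores),
}

print(f"n = {len(scores)}")
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```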

Qualitative Data – SLOs assessed using qualitative methods focus on words and descriptions and produce verbal or narrative data. These data are collected through focus groups, interviews, open-ended questionnaires, and other less structured methods. In these methods, descriptions and words are generally preferred over numbers. Many resources on qualitative assessment are available online, and the UAB Assessment Team is available to consult on qualitative data analysis. (NOTE: Many qualitative methods are also “quantifiable,” meaning the qualitative data can be summarized using numbers. For example, art faculty use a numeric rubric to apply their professional judgment in assessing student portfolios.)
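One common way to quantify qualitative data is to code each response with themes and then count how often each theme appears. The sketch below assumes faculty have already coded a handful of hypothetical open-ended responses; the theme labels are invented for illustration, and the code only does the counting, not the judgment.

```python
from collections import Counter

# Hypothetical open-ended responses, each already coded by faculty with
# one or more theme labels. Coding is a human judgment; this only counts.
coded_responses = [
    {"clear feedback", "workload"},
    {"clear feedback"},
    {"lab access", "workload"},
    {"clear feedback", "lab access"},
    {"workload"},
]

# Sets ensure each theme is counted at most once per response.
theme_counts = Counter(theme for themes in coded_responses for theme in themes)
total = len(coded_responses)
for theme, count in theme_counts.most_common():
    print(f"{theme}: {count} of {total} responses ({100 * count / total:.0f}%)")
```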

Disseminate, Discuss, Act

After the findings have been gathered and the Assessment Report created, the faculty should review the data and discuss both positive and negative results. We recommend doing this at a faculty meeting or annual retreat, when there is more time for discussion. Other stakeholders may be included in these meetings, and feedback should be captured to assist with Action Planning. Consistently archiving this documentation will help in writing the Annual Academic Plan.

Example of Reporting Format

| Element in the Report | Guiding Questions (some may not apply) |
|---|---|
| Student Learning Outcome | |
| Type of evidence collected and date collected | |
| Sampling criteria (if sampling) | Who submitted data? How many students are in the program (or how many graduate each year)? How many were asked to participate in the study? How were they selected? How many participated? How many non-responses were there? |
| How the evidence was evaluated | What scoring rubric was used? (Include the rubric in the report.) How many non-responses were there? What were the benchmark samples of student work? (Include those anchors in the report.) |
| Timeline of key events | Where in the curriculum does the assessment fall? What level of learning should the student have at this point in the curriculum? (Licensure exam = mastery; clinical evaluation = developing) |
| Summary of results | How many students passed the exam? How many students scored "1," "2," "3," or "4"? How many faculty members agreed, disagreed, or were neutral? |
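For the "Summary of results" questions, a simple frequency tally answers "How many students scored 1, 2, 3, or 4?" and, with different labels, how many faculty members agreed, disagreed, or were neutral. The function name `tally` and the data below are hypothetical. Levels with no responses are reported as zero so that gaps stay visible in the report.

```python
from collections import Counter


def tally(responses, levels):
    """Count responses at each level, reporting 0 for levels nobody chose."""
    counts = Counter(responses)
    return {level: counts[level] for level in levels}


# Hypothetical rubric scores (1-4) for one SLO.
scores = [3, 4, 2, 3, 3, 4, 2, 3]
score_counts = tally(scores, levels=(1, 2, 3, 4))
for level, count in score_counts.items():
    share = 100 * count / len(scores)
    print(f'Scored "{level}": {count} student(s) ({share:.1f}%)')

# The same tally works for hypothetical faculty survey responses.
faculty = ["agree", "agree", "neutral", "disagree", "agree"]
print(tally(faculty, levels=("agree", "disagree", "neutral")))
```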

Take Action

Once SLO data has been analyzed, disseminated, and discussed, it is time to use the data to improve quality. That improvement may fall into multiple categories, but an action plan is always necessary.

Categories for improvement may include Instruction & Teaching, Curricular, Budget & Resource, and Academic Process. Examples of improvements across these categories include:

- Adjust instruction

- Create a collaboration

- Revise Course Learning Outcomes

- Create new assessment tools

- Integrate other dimensions of significant learning

- Modify frequency of course offerings

- Add or remove courses

- Share pedagogical models with faculty

- Revise course content or assignments

- Add laboratory resources

- Add technology resources

- Hire or re-assign faculty or staff

- Alter classroom space

- Revise course prerequisites

- Revise criteria for admission

- Revise advising process or protocols

Elements of an action plan may include:

| Element | Questions to consider |
|---|---|
| Area for improvement | What areas do the data suggest for improvement? If the target was met at 100%, should the target be adjusted? Should the outcome be adjusted? Could we consider a higher level of cognitive engagement? |
| Actions and timelines | What types of changes, adjustments, revisions, or additions could we make to the program to see growth in the area for improvement identified? How much time will the change require? Should we make changes gradually in phases? Are there other critical timelines to consider (accreditation, licensure, other evaluations)? |
| Resources needed | Time? Budgets? Staff? Location? Equipment? |
| Monitoring success | How and when is it appropriate to evaluate the impact of these changes? Formative evaluation? Who will help evaluate the change? |